Can computers help teachers assess open-ended questions?

At the start of the last academic year, colleagues at Bolton College embarked on a tentative journey to discover two things: whether a computer could be trained to support teachers in assessing student responses to an open-ended question, and whether real-time feedback improved the quality of student work when responding to such a question. We have discovered that, by making use of natural language classification, natural language understanding and other tools, a computer can indeed be taught to analyse and assess responses to an open-ended question. It is also possible to offer textual and graphical real-time feedback to students. Our work also enables teachers to create multiple classification models that can be used to support the formative assessment of numerous open-ended questions.

Our initial trials suggest that the quality of student work improves when it is mediated by a computer. We recognise that larger trials need to be undertaken to confirm whether real-time feedback informs and improves the quality of student responses to open-ended formative assessment activities.

The emergence of this new assessment tool enables teachers to make use of a richer medium for assessing their students. Traditionally, online formative assessment activities are undertaken using closed questioning techniques such as yes/no questions, multiple-choice questions or drag-and-drop activities. Whilst valuable, this is a rather narrow way to undertake formative assessment. Our solution enables teachers to pose open-ended questions which can be automatically analysed and assessed by a computer. The ability to offer real-time feedback means that students can qualify and clarify their responses.

Teachers continue to play an important role. They train the classification models that underpin the open-ended questions that they want to present to their students. Teachers may also welcome the fact that the accuracy of the classification models improves as more students engage with each open-ended question and as the volume of training data rises.

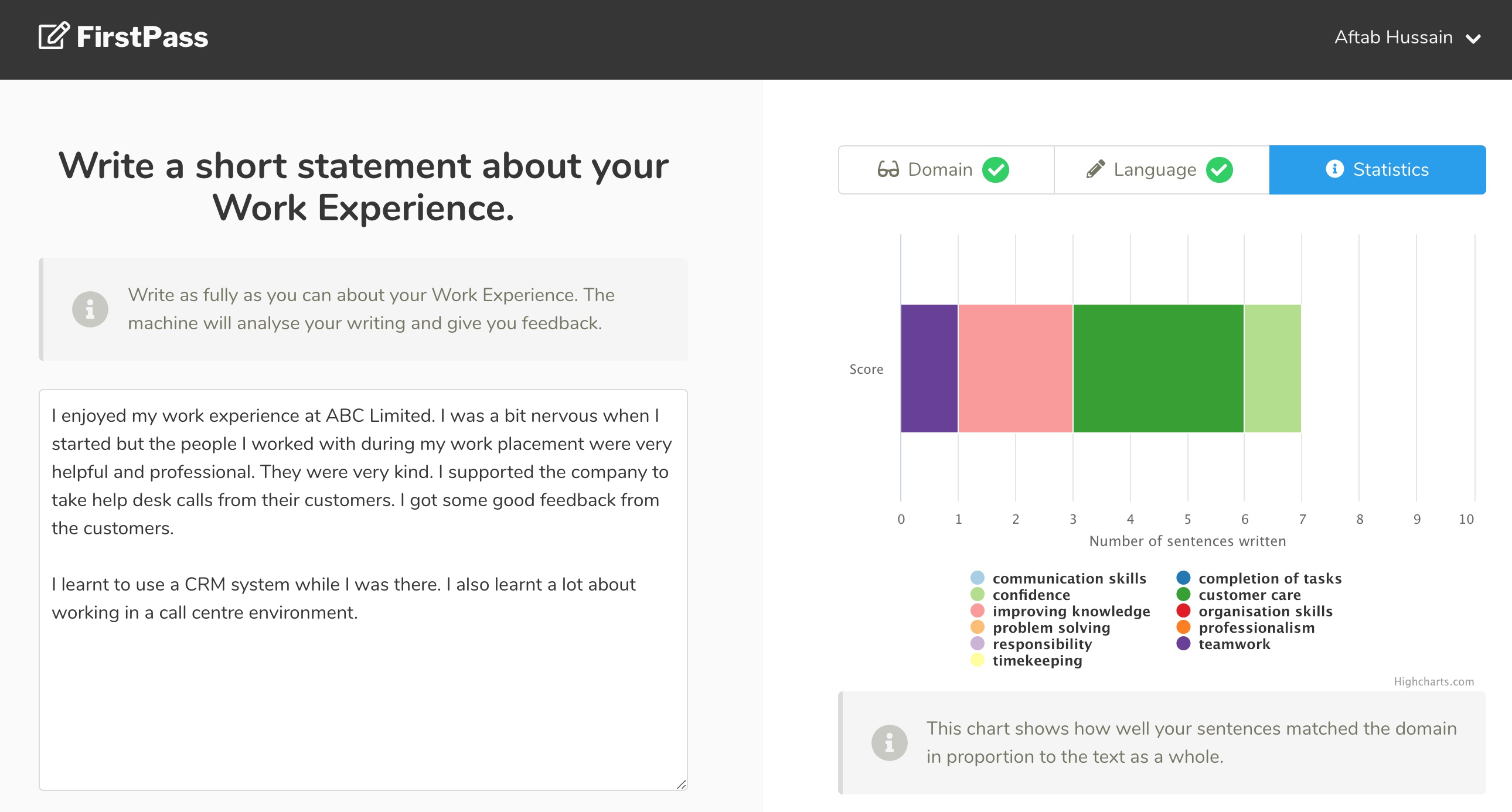

The following image shows one of the feedback screens that is presented to students when they respond to an open-ended question. In this instance, students are asked to write a short summary or evaluation of their time on a recent industry placement. The text is analysed against the set of natural language classifiers that a teacher has created for the task. The computer then does a simple count and presents the information to the student in the form of a chart. The chart is designed to help students identify the elements where additional information is required. Students who have tested the service welcome its ability to offer real-time feedback.
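The classify, count and chart steps described above can be sketched in a few lines of Python. This is only an illustration and not the service's actual implementation: a simple keyword match stands in for the trained natural language classifiers, and the category names, cue words and function names are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical categories and cue words that a teacher might define for the
# industry placement summary. Keyword matching is an assumption made for this
# sketch; the real service relies on trained natural language classifiers.
CATEGORY_KEYWORDS = {
    "skills": {"learned", "learnt", "skill", "skills", "trained"},
    "tasks": {"task", "tasks", "worked", "helped", "built"},
    "reflection": {"enjoyed", "challenging", "difficult", "improve"},
}


def split_sentences(text):
    """Split a response into sentences on ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def classify_sentence(sentence):
    """Return every category whose cue words appear in the sentence."""
    words = set(re.findall(r"[a-z']+", sentence.lower()))
    return [name for name, cues in CATEGORY_KEYWORDS.items() if words & cues]


def count_categories(text):
    """Count how many sentences in the response fall into each category."""
    counts = Counter({name: 0 for name in CATEGORY_KEYWORDS})
    for sentence in split_sentences(text):
        counts.update(classify_sentence(sentence))
    return counts


def find_gaps(counts):
    """List the categories that the response has not yet addressed."""
    return [name for name in CATEGORY_KEYWORDS if counts[name] == 0]


def render_chart(counts):
    """Render the counts as a plain-text bar chart, flagging empty categories."""
    width = max(len(name) for name in CATEGORY_KEYWORDS)
    lines = []
    for name in CATEGORY_KEYWORDS:
        note = "" if counts[name] else "  <- more detail needed"
        lines.append(f"{name.ljust(width)} | {'#' * counts[name]}{note}")
    return "\n".join(lines)


if __name__ == "__main__":
    response = (
        "I worked on the help desk and helped customers. "
        "I learned new skills in communication."
    )
    print(render_chart(count_categories(response)))
```

In this sketch a category with a count of zero is flagged in the chart, which mirrors how the feedback screen points students towards the elements that still need attention.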

So, can computers support teachers to assess open-ended questions? Our work indicates that they can. However, at present, they fall short of matching a teacher when it comes to assessing a student's response to an open-ended question. The vagaries of the written or spoken word impose certain limits on the computer's ability to support the formative assessment of open-ended questions. If we keep this in mind, we can still allocate a specific role to the computer when supporting the assessment process. For instance, the natural language classification model that underpins each open-ended question is good at analysing a student's answer against a broad range of anticipated responses. This means that the computer can help the student to identify parts of the answer that require further improvement or development. In this example, the use case is a narrow one. Nevertheless, it addresses a very practical problem that teachers face when they assess a student's answer.